
Research

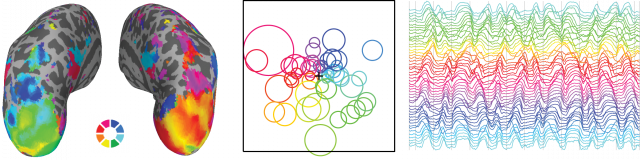

The Perception, Cognition, and Action (PCA) Lab seeks to understand how neural systems support the maintenance and manipulation of neural representations in the service of behavior. What happens to visual stimulus representations across different cortical regions as our task demands are updated? How do broad networks across visual, parietal, and frontal cortex collectively support representations of items no longer directly in view? What types of changes in neural response properties can account for observed changes in stimulus representations across task conditions?

We study various aspects of visual cognition using computational neuroimaging techniques, including multivariate stimulus reconstruction and univariate response modeling. We also draw on complementary neural measurements, each offering a different view of brain activity, to constrain our understanding.

Visual Cognition

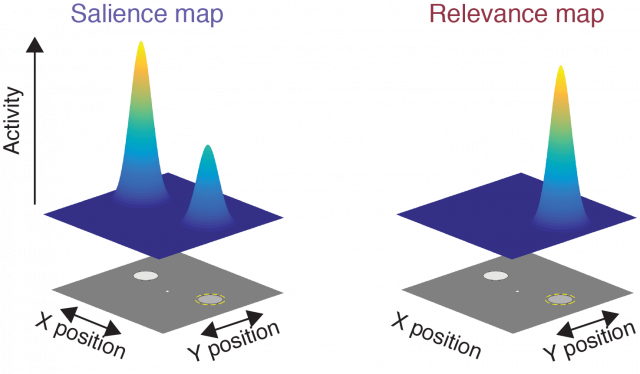

We use the visual system as a tractable canvas for exploring how neural systems encode, maintain, and manipulate representations of information. We take advantage of the well-characterized architecture of the human visual system, including its large-scale retinotopic organization and feature-selective response properties, as a tool for probing fundamental cognitive processes such as attention and working memory. Each of these processes has been well formalized within the visual system, enabling rigorous tests of grounded theoretical frameworks. For example, a prominent theoretical framework posits that the visual system maintains a ‘priority map’ that indexes the relative importance of different objects in the scene. The priority map guides visual behavior, such as reaches and eye movements, which in turn update the priority map. To test predictions of this theory and to extend it, we examine how manipulations of the visual stimulus (e.g., its contrast or its distribution of colors) and of the behavioral task (e.g., the probability that one item is important, or task difficulty) affect behavioral and neural measures of visual representations.
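
To make the priority-map idea concrete, here is a toy sketch. It is a hypothetical illustration, not the lab's model: it assumes priority is a weighted sum of a stimulus-driven salience map and a task-driven relevance map, that the next eye movement targets the location of peak priority, and that acting on a location lowers its priority (one simple way behavior could "update the map"). The function names, weights, and map values are all made up for illustration.

```python
import numpy as np

def priority_map(salience, relevance, w_salience=0.5, w_relevance=0.5):
    """Hypothetical priority map: a weighted sum of a stimulus-driven
    salience map and a task-driven relevance map."""
    return w_salience * salience + w_relevance * relevance

def select_target(priority):
    """Location (row, col) with the highest priority, e.g. the next saccade target."""
    return tuple(int(i) for i in np.unravel_index(np.argmax(priority), priority.shape))

def update_after_selection(priority, target, suppression=0.5):
    """Hypothetical feedback step: lower the priority of the location just
    acted on, so behavior updates the map."""
    updated = priority.copy()
    updated[target] *= 1.0 - suppression
    return updated

# Toy 3x3 visual field (made-up values).
salience = np.zeros((3, 3))
salience[0, 0] = 1.0            # high-contrast but task-irrelevant item
relevance = np.zeros((3, 3))
relevance[0, 0] = 0.2
relevance[2, 2] = 1.0           # task-relevant item

prio = priority_map(salience, relevance, w_salience=0.3, w_relevance=0.7)
first = select_target(prio)     # relevant item wins: 0.7 vs 0.44
prio = update_after_selection(prio, first, suppression=0.9)
second = select_target(prio)    # gaze then moves on to the salient item
print(first, second)            # prints: (2, 2) (0, 0)
```

Changing the weights is a crude stand-in for a task manipulation: raising `w_salience` above `w_relevance` in this toy lets the high-contrast item win the first selection instead.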

Computational Neuroimaging

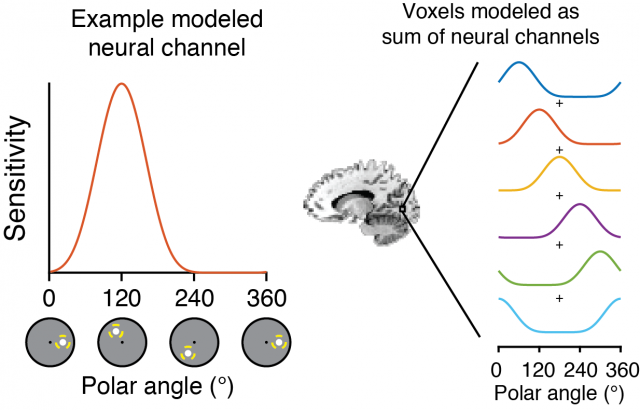

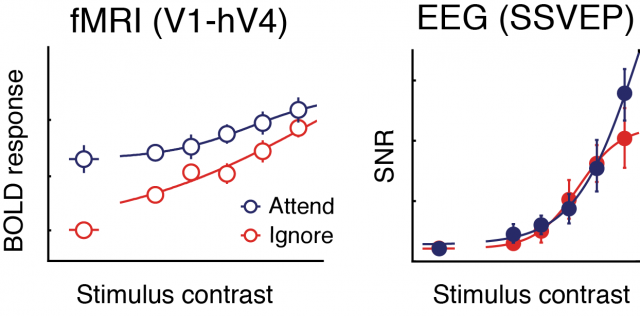

Our goal is to build useful models of neural function, based on animal experiments, that allow us to quantify properties of neural representations as a function of visual stimulus features and behavioral task demands. One class of these models is the ‘inverted encoding model’: a simplified neural encoding model that can be inverted to ‘reconstruct’ representations of stimulus features from measured neural activation patterns. We apply these methods, together with other approaches such as measuring single-voxel receptive fields and steady-state visual EEG responses, to track the dynamics of neural stimulus representations across task manipulations. In some projects, the goal is to use simplified models to compare neural representations across conditions; in others, the goal is to determine which models of visual cognition best account for neural signals. Across all our studies, we strive for analytic transparency and reproducibility. We also believe science works best when we all work together and build on each other’s ideas. As such, all data and analysis code for published reports are available on our lab’s website, as well as on Dr. Sprague’s Open Science Framework profile and GitHub page.
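
The core logic of an inverted encoding model can be sketched in a few lines. In this minimal version, each voxel's response is modeled as a weighted sum of a small set of feature-tuned "channels" (B_train = C_train W); the weights W are estimated by least squares on training data, and the model is then inverted on held-out data by solving B_test = C_test W for the channel responses C_test. The choices here are assumptions for illustration, not the lab's published pipeline: 8 channels tiling a 360° circular feature, a raised-cosine basis cos(Δ/2)^8, simulated voxel data, and a population-vector readout of the reconstructed channel profile. The lab's released analysis code is the authoritative reference.

```python
import numpy as np

rng = np.random.default_rng(0)

N_CHANNELS = 8                                          # assumed channel count
CENTERS = np.arange(N_CHANNELS) * 360.0 / N_CHANNELS    # channel centers (deg)

def wrap_deg(delta):
    """Wrap angular differences into [-180, 180)."""
    return (np.asarray(delta) + 180.0) % 360.0 - 180.0

def channel_responses(features, power=8):
    """Predicted channel responses to each stimulus using an assumed
    raised-cosine basis cos(delta/2)**power. Shape: (n_trials, n_channels)."""
    delta = wrap_deg(features[:, None] - CENTERS[None, :])
    return np.cos(np.deg2rad(delta) / 2.0) ** power

def train_iem(b_train, features):
    """Estimate channel-to-voxel weights W in B = C @ W by least squares."""
    c_train = channel_responses(features)
    w_hat, *_ = np.linalg.lstsq(c_train, b_train, rcond=None)
    return w_hat                                        # (n_channels, n_voxels)

def invert_iem(b_test, w_hat):
    """Invert the model: solve B_test = C_test @ W_hat for C_test."""
    c_t, *_ = np.linalg.lstsq(w_hat.T, b_test.T, rcond=None)
    return c_t.T                                        # (n_trials, n_channels)

def decode_feature(c_hat):
    """Population-vector readout of each reconstructed channel profile (deg)."""
    vec = c_hat @ np.exp(1j * np.deg2rad(CENTERS))
    return np.rad2deg(np.angle(vec)) % 360.0

# Simulated 'voxels' (hypothetical data, not real measurements).
n_voxels, n_train, n_test = 50, 200, 40
w_true = rng.normal(size=(N_CHANNELS, n_voxels))
f_train = rng.uniform(0, 360, n_train)
f_test = rng.uniform(0, 360, n_test)
b_train = channel_responses(f_train) @ w_true + rng.normal(0, 0.1, (n_train, n_voxels))
b_test = channel_responses(f_test) @ w_true + rng.normal(0, 0.1, (n_test, n_voxels))

w_hat = train_iem(b_train, f_train)
recon = invert_iem(b_test, w_hat)
errors = np.abs(wrap_deg(decode_feature(recon) - f_test))
print(f"mean absolute decoding error: {errors.mean():.1f} deg")
```

Using `np.linalg.lstsq` instead of an explicit matrix inverse is a numerical-stability choice; when the design matrix has full column rank, it gives the same estimate as the closed-form (CᵀC)⁻¹CᵀB solution. The basis exponent matters here: if it is too low for the number of channels, the channel design matrix can become rank-deficient and W is no longer uniquely identified.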

Complementary Neural Measurements

The brain operates across vast spatial and temporal scales: action potentials within single neurons occur on a millisecond timescale, while large-scale network transitions unfold over seconds. We measure neural signals at a variety of temporal and spatial scales, with an additional focus on the complementary physiological resolution that each method affords. We use advanced high-sampling-rate functional MRI techniques available at the UCSB Brain Imaging Center to sample neural activation patterns across the entire brain at ~1–2 Hz. Within fMRI experiments, we employ both univariate analyses, which quantify responses in each region of the brain, and multivariate computational neuroimaging approaches, which assay the information carried by activation patterns within these regions. Additionally, we use several electroencephalography (EEG) techniques, including characterization of oscillatory frequency bands (typically activity in the alpha band), event-related potentials, and steady-state visually evoked potentials (SSVEPs): ‘tagged’ neural activity that follows the temporal frequency of a flickering visual stimulus. Beyond providing complementary spatial and temporal resolution, these tools also index different properties of the underlying neural responses. With these complementary measurements in hand, we can better home in on computational models of the fundamental neural mechanisms supporting visual cognition.
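
Frequency tagging works because each flickering stimulus drives a response at its own flicker frequency, so the response to each item can be read out separately from the EEG amplitude spectrum. The sketch below is a minimal, single-electrode illustration under assumed values: a 500 Hz sampling rate, a 4 s window, two hypothetical stimuli tagged at 12 Hz and 15 Hz, and simulated data in which the 12 Hz item evokes the larger response. Real analyses typically add steps such as epoch averaging and comparison with neighboring frequency bins, which are omitted here.

```python
import numpy as np

def ssvep_amplitude(eeg, fs, tag_hz):
    """Return (bin frequency, amplitude) of the spectral component nearest
    tag_hz in a single-channel EEG trace sampled at fs Hz."""
    n = eeg.size
    spectrum = np.fft.rfft(eeg)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    amps = 2.0 * np.abs(spectrum) / n              # single-sided amplitude spectrum
    idx = int(np.argmin(np.abs(freqs - tag_hz)))   # nearest frequency bin
    return float(freqs[idx]), float(amps[idx])

# Simulated data (hypothetical values): two stimuli flicker at 12 Hz and
# 15 Hz, and the 12 Hz item evokes the larger response.
rng = np.random.default_rng(1)
fs, duration = 500.0, 4.0                          # 4 s window -> 0.25 Hz bins
t = np.arange(int(fs * duration)) / fs
eeg = (2.0 * np.sin(2 * np.pi * 12.0 * t)
       + 1.0 * np.sin(2 * np.pi * 15.0 * t)
       + rng.normal(0.0, 1.0, t.size))

f12, a12 = ssvep_amplitude(eeg, fs, 12.0)
f15, a15 = ssvep_amplitude(eeg, fs, 15.0)
print(f"{f12:.2f} Hz: {a12:.2f}   {f15:.2f} Hz: {a15:.2f}")
```

Choosing a window that contains a whole number of cycles of each tagging frequency (here 48 and 60 cycles) places each tag exactly on an FFT bin, which avoids spectral leakage between neighboring bins.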

Funding

Our work is supported by generous grants from:

UCSB Academic Senate

Alfred P. Sloan Foundation (Fellowship to Prof. Sprague)

National Institutes of Health - National Eye Institute (R01-EY035300)

Army Research Laboratory, via a cooperative agreement with the Institute for Collaborative Biotechnologies

NVIDIA (hardware grant)